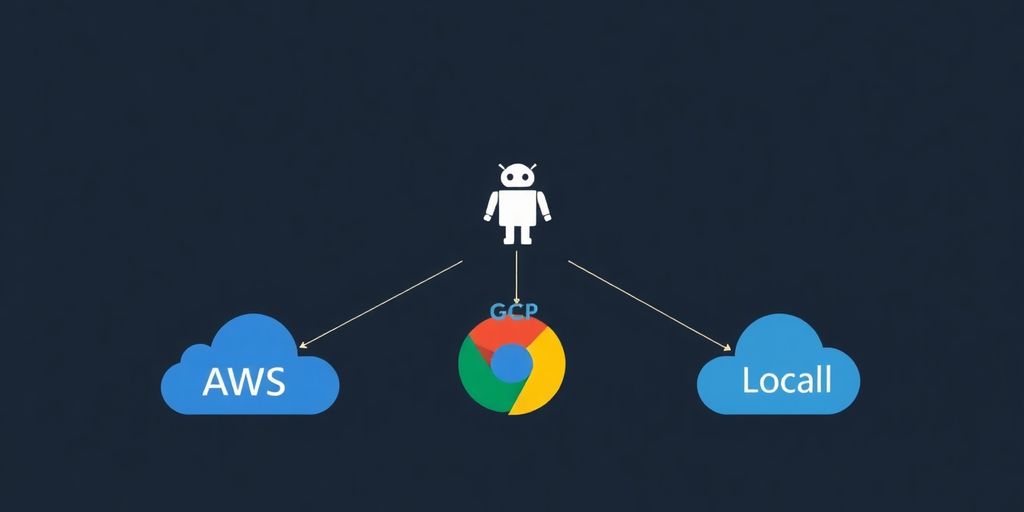

AI agents are becoming a big deal, and knowing how to host agents is key for anyone working with them. Whether you're looking to put your agents on a major cloud platform like AWS, GCP, or Azure, or you want to keep things running locally, there are good ways to do it. This article will walk you through the options, helping you understand what each platform offers and how to get your AI agents up and running smoothly. We'll cover everything from getting started to making sure your agents perform well and don't cost too much.

Key Takeaways

- Cloud platforms like AWS, GCP, and Azure provide different services (serverless functions, container services) for hosting AI agents, each with its own benefits for scalability and management.

- AWS offers services like Lambda for serverless agents and Fargate for containerized agents, allowing for flexible deployment options and integration with other AWS tools.

- Google Cloud provides Cloud Run for containerized agents and Cloud Functions for serverless setups, along with Vertex AI Agent Engine for more complex agent architectures.

- Microsoft Azure features Azure Functions for serverless agent deployment and Azure Container Apps for container-based solutions, integrating well with Azure's AI services.

- Local deployment of AI agents is useful for development and testing, often involving Docker containers and careful management of dependencies to ensure a stable environment.

Understanding AI Agent Hosting Requirements

Defining AI Agents and Their Functionality

So, what exactly is an AI agent? Well, simply put, it's a system designed to perceive its environment, make decisions, and then act to achieve specific goals. Think of it as a digital worker that can automate tasks, analyze data, and even interact with users. AI agents are pretty versatile.

They come in all shapes and sizes, from simple chatbots to complex systems that manage entire supply chains. The core capabilities usually involve things like natural language processing, machine learning, and the ability to connect to different APIs and data sources. It's all about giving them the tools they need to get the job done.

Key Benefits of Cloud Deployment for AI Agents

Why bother hosting your AI agents in the cloud? Turns out, there are some pretty compelling reasons. For starters, the cloud offers scalability. You can easily scale up or down depending on the workload, which is super handy if you have unpredictable traffic. Plus, you get access to a ton of different services and tools that can help you build and manage your agents more effectively.

Here's a quick rundown of the benefits:

- Scalability: Automatically adjust resources based on demand.

- High-Performance Compute: Access powerful hardware, including GPUs, when you need them.

- Integration: Connect securely with APIs, databases, and storage solutions.

- Global Reach: Deploy agents globally with low-latency endpoints.

- CI/CD Integration: Streamline updates with continuous integration and continuous deployment pipelines.

Scalability and Performance Considerations

Okay, so you're thinking about deploying your AI agent. Great! But before you jump in, you need to think about scalability and performance. How many users are you expecting? What kind of response times do you need? These are important questions to answer because they'll affect how you design and deploy your agent.

Choosing the right infrastructure is key. You might need to consider things like serverless functions, containerized deployments, or even dedicated virtual machines. It all depends on the specific requirements of your agent and the expected workload. Don't forget to factor in things like network latency and data storage too. It's a whole puzzle, but getting it right can make a huge difference in the long run.

It's important to monitor your agent's performance closely and make adjustments as needed. This might involve tweaking the code, optimizing the infrastructure, or even changing the architecture altogether. The goal is to find the sweet spot where you're getting the best possible performance without breaking the bank.

Hosting AI Agents on AWS

AWS offers a robust suite of services ideal for hosting and scaling AI agents. From serverless functions to containerized deployments, AWS provides the flexibility and resources needed to build and manage intelligent applications. Let's explore the options.

Leveraging AWS Lambda for Serverless Agents

AWS Lambda is a great choice for deploying serverless AI agents. It allows you to run code without provisioning or managing servers. This is especially useful for event-driven agents that respond to triggers such as data changes or user requests.

To deploy an agent on Lambda, you'll need to:

- Package your agent's code and dependencies into a deployment package.

- Upload the package to Lambda.

- Configure the Lambda function with the necessary resources and permissions.

- Set up triggers to invoke the function.

Lambda's automatic scaling capabilities ensure that your agent can handle varying workloads without manual intervention. Plus, you only pay for the compute time you consume, making it a cost-effective solution for many use cases. For example, you can use AWS Lambda to build custom AI agents with LangChain.
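To make the steps above a bit more concrete, here is a minimal sketch of what the function code might look like. It assumes an API Gateway proxy-style trigger that delivers the request body as a JSON string; `run_agent` and the `prompt` field are hypothetical placeholders for your own agent logic and request schema.

```python
import json


def run_agent(prompt: str) -> str:
    """Hypothetical stand-in for the agent's real logic (e.g. an LLM call)."""
    return f"Agent received: {prompt}"


def lambda_handler(event, context):
    """Entry point that Lambda invokes; `event` carries the trigger payload."""
    # Assumption: an API Gateway proxy trigger, where the body is a JSON string.
    body = json.loads(event.get("body") or "{}")
    prompt = body.get("prompt", "")
    if not prompt:
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'prompt'"})}
    return {"statusCode": 200, "body": json.dumps({"reply": run_agent(prompt)})}
```

Other triggers (queues, storage events) deliver differently shaped events, so the parsing step would change while the overall handler structure stays the same.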

Deploying Containerized Agents with AWS Fargate

For more complex AI agents that require specific environments or dependencies, AWS Fargate provides a container-based deployment option. Fargate allows you to run containers without managing the underlying infrastructure. This is beneficial for agents that need custom libraries or have resource-intensive workloads.

Here's how to deploy an agent on Fargate:

- Create a Docker image of your agent.

- Push the image to a container registry like Amazon ECR.

- Define a task definition that specifies the container image, resources, and networking configuration.

- Create an Amazon ECS cluster and run the task (or a long-running service) from that task definition using the Fargate launch type.

Fargate offers greater control over the environment and resources allocated to your agent. It's a good choice for agents that require dedicated resources or have specific performance requirements.
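As a rough illustration of the task definition step, the sketch below builds one as a plain Python dictionary. The family name, container name, image URI, and sizing are hypothetical, and a real definition would usually also need an execution role so the task can pull the image from ECR.

```python
def build_task_definition(image_uri: str, port: int = 8080) -> dict:
    """Return a task definition in the general shape ECS expects for Fargate."""
    return {
        "family": "ai-agent",                    # hypothetical task family name
        "networkMode": "awsvpc",                 # Fargate tasks use awsvpc networking
        "requiresCompatibilities": ["FARGATE"],
        "cpu": "512",                            # 0.5 vCPU
        "memory": "1024",                        # 1 GiB
        "containerDefinitions": [
            {
                "name": "agent",                 # hypothetical container name
                "image": image_uri,
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


task_def = build_task_definition(
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/ai-agent:latest"  # placeholder URI
)
```

A dictionary like this could then be passed as keyword arguments to boto3's `register_task_definition` call on an ECS client, or written out as JSON for the AWS CLI.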

Integrating with AWS Services for Enhanced Functionality

One of the key advantages of hosting AI agents on AWS is the ability to integrate with other AWS services. This allows you to enhance the functionality of your agents and build more sophisticated applications.

Here are some examples of how you can integrate with AWS services:

- Use Amazon S3 for storing and retrieving data.

- Use Amazon DynamoDB for managing state and storing metadata.

- Use Amazon SQS for asynchronous communication between agents.

- Use Amazon SageMaker for training and deploying machine learning models.

Integrating with AWS services can significantly improve the performance, scalability, and reliability of your AI agents. It also allows you to take advantage of the rich ecosystem of tools and services that AWS offers.

By combining the power of AI agents with the capabilities of AWS, you can build intelligent applications that solve real-world problems. Whether you choose Lambda for serverless deployments or Fargate for containerized workloads, AWS provides the tools and resources you need to succeed.

Hosting AI Agents on Google Cloud Platform

Utilizing Cloud Run for Containerized Agent Deployment

Google Cloud Platform (GCP) provides several options for hosting AI agents, and Cloud Run is a strong choice for containerized deployments. It runs stateless containers that are invoked by HTTP requests, which suits AI agents that respond to user queries or other triggers.

Cloud Run offers a fully managed environment, so you don't have to worry about managing servers or infrastructure. You just deploy your container, and Cloud Run handles the rest. It automatically scales your agent based on traffic, so you only pay for what you use.

Here's a quick rundown of why Cloud Run is a good fit:

- Scalability: Handles traffic spikes without breaking a sweat.

- Simplicity: Deploy containers without managing servers.

- Cost-Effective: Pay-per-use pricing model.
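For a sense of what goes inside the container, here is a minimal sketch of an HTTP entry point using only the Python standard library. Cloud Run passes the port to listen on through the `PORT` environment variable; the echo-style reply and the `prompt` field are hypothetical placeholders for real agent logic.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class AgentHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON request body sent by the caller.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        # Hypothetical agent logic: echo the prompt back.
        reply = json.dumps(
            {"reply": f"Agent received: {payload.get('prompt', '')}"}
        ).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)


def make_server(port: int) -> HTTPServer:
    # Bind to all interfaces so the container's port is reachable.
    return HTTPServer(("0.0.0.0", port), AgentHandler)


def main() -> None:
    # Cloud Run tells the container which port to use via PORT (8080 by default).
    make_server(int(os.environ.get("PORT", "8080"))).serve_forever()
```

In a real image, the container's start command would call `main()`. A production agent would more likely sit behind a framework such as Flask or FastAPI, but the contract is the same: listen on `PORT` and keep no state in the container.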

Implementing Serverless Agents with Cloud Functions

Cloud Functions (since rebranded by Google as Cloud Run functions) is GCP's serverless compute service. It's ideal for event-driven AI agents. If your agent needs to respond to events like new data in a database or messages in a queue, Cloud Functions can be a good fit.

With Cloud Functions, you write small, single-purpose functions that respond to specific events. GCP handles the infrastructure and scaling. This can significantly reduce operational overhead.

Consider these points when using Cloud Functions:

- Event-Driven: Perfect for responding to triggers.

- Low Overhead: No server management required.

- Integration: Works well with other GCP services.
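Here is a small sketch of what a single-purpose, event-driven function might look like. It assumes a Pub/Sub-style event whose payload arrives base64-encoded in a `data` field; the order-review rule and the field names `order_id` and `amount` are hypothetical.

```python
import base64
import json


def handle_message(event: dict, context=None) -> dict:
    """Handle one Pub/Sub-style event and return the agent's decision."""
    # Assumption: the message payload is base64-encoded JSON under `data`.
    raw = base64.b64decode(event.get("data", "")).decode("utf-8")
    task = json.loads(raw) if raw else {}
    # Hypothetical single-purpose logic: flag large orders for human review.
    needs_review = task.get("amount", 0) > 1000
    return {"order_id": task.get("order_id"), "needs_review": needs_review}
```

Keeping the function this narrow is the point: one event type in, one decision out, with anything heavier delegated to other services.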

Architecting AI Agents with Vertex AI Agent Engine

Vertex AI Agent Engine is a managed service designed specifically for running AI agents in production. It handles the infrastructure, scaling, security, and monitoring, so you can focus on building your agent's logic.

Vertex AI Agent Engine integrates with the Agent Development Kit (ADK) and Vertex AI's capabilities, such as Gemini models, evaluation tools, and monitoring dashboards. It's designed to take your agent from a development script to a robust, enterprise-grade application.

Hosting AI Agents on Microsoft Azure

Azure provides several options for hosting AI agents, each with its own strengths. Choosing the right service depends on your specific needs, such as scalability requirements, cost considerations, and integration with other Azure services. Let's explore the common approaches.

Deploying Serverless Agents with Azure Functions

Azure Functions is a solid choice for event-driven, serverless AI agents. It lets you run code without managing servers and scales automatically based on demand. This is ideal for agents that respond to triggers, such as HTTP requests or messages from a queue.

To deploy an agent using Azure Functions, you'll typically:

- Create an Azure Functions app.

- Write your agent's code in a supported language like Python or C#.

- Configure triggers and bindings to connect to other Azure services.

- Deploy the function app to Azure.

Azure Functions offers a consumption-based pricing model, making it cost-effective for workloads with variable traffic. It's a good fit for agents that don't require dedicated resources and can tolerate cold starts.
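Cold starts hurt most when every invocation also repeats expensive setup. One common mitigation, sketched below in plain Python rather than any Azure-specific API, is to load heavy resources once per process and reuse them on later calls. `get_agent_resources` and the simulated load time are hypothetical.

```python
import functools
import time


@functools.lru_cache(maxsize=1)
def get_agent_resources() -> dict:
    """Load expensive resources once per process (hypothetical example)."""
    time.sleep(0.1)  # stands in for loading a model or opening connections
    return {"model": "loaded", "loaded_at": time.time()}


def handle_request(prompt: str) -> str:
    resources = get_agent_resources()  # slow on the first call, cached afterwards
    return f"[{resources['model']}] {prompt}"
```

This does not remove the cold start itself, but it means only the first request on a fresh instance pays the setup cost.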

Containerizing Agents with Azure Container Apps

For more complex AI agents that require specific dependencies or configurations, Azure Container Apps offers a flexible containerization solution. You can package your agent into a Docker container and deploy it to Azure Container Apps, which manages the underlying infrastructure.

Key benefits of using Azure Container Apps include:

- Simplified deployment and management of containerized applications.

- Automatic scaling based on traffic and resource utilization.

- Integration with Azure DevOps for CI/CD pipelines.

Azure Container Apps is well-suited for agents that need a dedicated environment and consistent performance. It provides more control over the runtime environment compared to serverless functions.

Integrating with Azure AI Services

Azure offers a suite of AI tools and APIs that can enhance the capabilities of your AI agents. These include Azure AI Foundry Agent Service for building conversational AI agents, Azure AI services (formerly Azure Cognitive Services) for tasks like natural language processing and computer vision, and Azure Machine Learning for training and deploying machine learning models.

By integrating with these services, you can:

- Add intelligent features to your agents without writing code from scratch.

- Automate tasks such as sentiment analysis, image recognition, and language translation.

- Build more sophisticated and responsive AI agents.

Integrating your AI agents with Azure AI services can significantly improve their performance and functionality. It allows you to focus on the core logic of your agents while offloading complex tasks to specialized services. This approach can also reduce development time and costs.

Local Deployment Strategies for AI Agents

While cloud platforms offer scalability and ease of management, local deployment of AI agents can be advantageous for development, testing, and specific use cases where data privacy or low latency is paramount. Let's explore how to effectively host AI agents on your local machine.

Setting Up a Local Development Environment

Creating a robust local development environment is the first step. This involves installing the necessary software and configuring your system to support AI agent development. A well-configured environment ensures smooth development and testing cycles.

- Install Python: Most AI agents are built using Python. Download and install the latest version from the official Python website.

- Set up a Virtual Environment: Use `venv` or `conda` to create isolated environments for each project. This prevents dependency conflicts.

- Install Required Libraries: Use `pip` to install necessary libraries such as TensorFlow, PyTorch, or other AI-related packages. For example, `pip install tensorflow`.

Running Agents with Docker Containers Locally

Docker containers provide a consistent and isolated environment for running AI agents. This approach simplifies deployment and ensures that the agent behaves the same way across different systems. It's a great way to deploy AI agents consistently.

- Create a Dockerfile: Define the environment for your agent, including the base image, dependencies, and startup command.

- Build the Docker Image: Use the `docker build` command to create an image from your Dockerfile.

- Run the Container: Use the `docker run` command to start a container from your image. Map ports as needed to access the agent's services.

Managing Dependencies and Virtual Environments

Proper dependency management is crucial for avoiding conflicts and ensuring reproducibility. Virtual environments and dependency management tools help maintain a clean and organized project structure.

- Use `requirements.txt`: List all project dependencies in a `requirements.txt` file. This allows others to easily recreate the environment.

- Regularly Update Dependencies: Keep your dependencies up to date to benefit from bug fixes and performance improvements. Use `pip install --upgrade <package_name>`.

- Version Control: Use Git to track changes to your code and dependencies. This makes it easier to revert to previous states if something goes wrong.
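One way to catch drift between your pinned dependencies and what is actually installed is a small check script. The sketch below is deliberately simplified and assumes plain `name==version` lines; it ignores extras, environment markers, and version ranges.

```python
from importlib import metadata


def parse_pins(requirements_text: str) -> dict:
    """Parse `name==version` lines, skipping blanks and comments."""
    pins = {}
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def find_mismatches(pins: dict) -> dict:
    """Return {package: (pinned, installed_or_None)} for anything out of sync."""
    mismatches = {}
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is pinned but not installed
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches
```

You could run this against the contents of your requirements file at agent startup or in a test, and fail loudly if `find_mismatches` returns anything.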

Local deployment offers control over your data and infrastructure. It's ideal for sensitive projects or when you need to minimize latency. However, it also requires more manual configuration and maintenance compared to cloud-based solutions.

Best Practices for AI Agent Deployment

Implementing Secure Credential Management

When deploying AI agents, it's important to handle credentials securely. I mean, you don't want your agent compromised, right? The best approach is to avoid hardcoding API keys or secrets directly into your code. Instead, use environment variables or a dedicated secret management service. This way, you can update credentials without modifying the code itself. Think of it as changing the locks on your house without having to rebuild the door.

- Use environment variables for storing sensitive information.

- Implement role-based access control (RBAC) to limit access.

- Regularly rotate API keys and tokens.
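As a minimal sketch of the environment-variable approach, the helper below reads a secret at runtime and fails fast if it is missing. The variable name `AGENT_API_KEY` is a hypothetical example.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not configured."""


def get_secret(name: str) -> str:
    """Read a secret from the environment and fail fast if it is absent."""
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"Environment variable {name} is not set")
    return value


# Usage (hypothetical variable name):
# api_key = get_secret("AGENT_API_KEY")
```

Failing at startup is usually preferable to discovering a missing key on the first real request. On a cloud platform, the same function can stay unchanged while the platform's secret manager injects the value into the environment.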

Establishing Robust Monitoring and Logging

Monitoring and logging are essential for understanding how your AI agents are performing and identifying potential issues. Without them, you're basically flying blind. Implement a comprehensive logging strategy that captures relevant information about agent behavior, errors, and resource usage. Set up alerts to notify you of any anomalies or performance degradation. This will help you proactively address problems and ensure your agents are running smoothly. It's like having a health checkup for your AI.

- Centralize logs for easy analysis.

- Set up real-time monitoring dashboards.

- Implement alerting for critical events.
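Centralized logging is much easier when each log line is machine-readable. Here is a small sketch using Python's standard `logging` module to emit one JSON object per line; the `agent` field and its contents are hypothetical conventions, not a standard.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Include structured fields passed via extra={"agent": {...}}.
        if hasattr(record, "agent"):
            entry["agent"] = record.agent
        return json.dumps(entry)


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a JSON logger; call once at startup to avoid duplicate handlers."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)  # defaults to stderr
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    return logger
```

A call such as `log.info("tool call finished", extra={"agent": {"tool": "search", "latency_ms": 120}})` then produces a line that log aggregators can filter and chart without custom parsing.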

Automating Deployments with CI/CD Pipelines

Automating deployments with CI/CD pipelines is a game-changer for AI agent development. It streamlines the deployment process, reduces the risk of errors, and enables faster iteration. By integrating your code repository with a CI/CD tool, you can automatically build, test, and deploy your agents whenever changes are made. This allows you to focus on developing new features and improving existing ones, rather than spending time on manual deployment tasks. It's like having a robot that handles all the boring stuff for you.

Automating deployments is not just about speed; it's about reliability and consistency. By using CI/CD pipelines, you can ensure that your AI agents are deployed in a consistent manner, reducing the risk of configuration errors and other deployment-related issues.

Here's a simple table illustrating the benefits:

| Benefit | Description |
|---|---|
| Faster Deployments | Automate the build, test, and deployment process. |
| Reduced Errors | Minimize manual intervention and potential human errors. |
| Increased Efficiency | Free up developers to focus on more important tasks. |
| Consistent Releases | Ensure consistent deployments across different environments. |

Consider using tools like GitHub Actions or Jenkins to set up your CI/CD pipelines.

Optimizing Cost and Performance for Hosted Agents

It's easy to get carried away with resources when you're setting up AI agents. But let's be real, nobody wants to break the bank or have things running slower than molasses. So, let's talk about keeping costs down and performance up.

Strategies for Cost-Effective Cloud Usage

Cloud costs can spiral if you're not careful. One thing I've learned is to really understand the pricing models of AWS, GCP, or Azure. Are you better off with reserved instances, spot instances, or pay-as-you-go? It depends on your workload. For example, if you're using AWS Lambda for serverless agents, you only pay for the compute time you actually use. That can be a huge win for intermittent tasks.

- Right-size your instances: Don't over-provision. Start small and scale up as needed.

- Use spot instances: For non-critical tasks that can tolerate interruption, spot instances can save a ton of money, but the provider can reclaim them at short notice.

- Implement auto-scaling: Scale resources up or down based on demand.

Another thing is to monitor your usage. Cloud providers have tools to track spending. Set up alerts so you know when you're approaching your budget. Also, look for idle resources and shut them down. No point in paying for something you're not using.
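A quick back-of-the-envelope model can help you compare options before you commit. The sketch below assumes a typical pay-per-use serverless pricing shape (a charge per GB-second of compute plus a charge per million requests). The rates used are placeholders for illustration only, not any provider's actual prices, and free tiers are ignored.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Estimate pay-per-use cost: compute charge plus request charge."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


# Placeholder rates for illustration only; look up your provider's current pricing.
estimate = estimate_monthly_cost(
    invocations=2_000_000,
    avg_duration_s=0.5,
    memory_gb=1.0,
    price_per_gb_second=0.00002,
    price_per_million_requests=0.25,
)
print(f"Estimated monthly cost: ${estimate:.2f}")  # prints: Estimated monthly cost: $20.50
```

Plugging in your own traffic numbers and the real published rates makes it easier to see when an always-on container or VM becomes cheaper than per-invocation billing.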

Performance Tuning for High-Throughput Agents

If your agents are handling a lot of requests, you need to make sure they can keep up. Performance tuning is all about finding bottlenecks and fixing them. Start by profiling your code to see where the time is going. Are you spending too much time on I/O, computation, or network calls?

- Optimize your code: Use efficient algorithms and data structures.

- Cache frequently accessed data: Reduce the need to fetch data from external sources.

- Use asynchronous operations: Don't block while waiting for I/O.

Consider using a message queue like RabbitMQ or Kafka to handle incoming requests. This can help decouple your agents and prevent them from being overwhelmed. Also, make sure your database queries are optimized. Slow queries can kill performance.
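To illustrate the asynchronous point, here is a minimal sketch that runs several I/O-bound calls concurrently with `asyncio`, using a semaphore to cap how many are in flight at once. `call_tool` is a hypothetical stand-in for a real network call, and the tool names are made up.

```python
import asyncio


async def call_tool(name: str, delay_s: float = 0.05) -> str:
    """Hypothetical I/O-bound call (e.g. an HTTP request to an external API)."""
    await asyncio.sleep(delay_s)  # stands in for waiting on the network
    return f"{name}: done"


async def run_tools(names: list[str], max_concurrency: int = 5) -> list[str]:
    """Run tool calls concurrently, capped so downstream services are not flooded."""
    limit = asyncio.Semaphore(max_concurrency)

    async def guarded(name: str) -> str:
        async with limit:
            return await call_tool(name)

    # gather returns results in the same order as the inputs.
    return await asyncio.gather(*(guarded(n) for n in names))


results = asyncio.run(run_tools(["search", "lookup", "summarize"]))
```

With three calls of 50 ms each, the sequential version would wait roughly 150 ms while the concurrent one waits roughly 50 ms. The cap matters once the list grows, since unbounded fan-out can trip rate limits on the services your agent depends on.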

Choosing the Right Compute Resources

Picking the right compute resources is a balancing act. You need enough power to handle the workload, but you don't want to overspend. Think about whether you need CPUs, GPUs, or a mix of both. For example, if your agents are doing a lot of machine learning, GPUs can make a big difference.

Tooling matters here too. On Google Cloud, the Agent Development Kit is an open-source Python (and Java) library built specifically to make it easy to develop agents that leverage Vertex AI (especially Gemini models) and are ready for deployment on GCP. It's the recommended way to get started with agents in the Google Cloud ecosystem.

- Consider the workload: CPU-intensive vs. GPU-intensive.

- Evaluate different instance types: Compare performance and cost.

- Use benchmarks: Test different configurations to find the sweet spot.

It's important to remember that cost and performance are often intertwined. Sometimes, spending a little more on better resources can actually save you money in the long run by improving efficiency and reducing the need for manual intervention. Don't be afraid to experiment and find what works best for your specific use case.

Conclusion

So, we've gone over how to get your AI agents up and running, whether you're using AWS, GCP, Azure, or just keeping things local. Each option has its own good points and things to think about. Cloud platforms like AWS, GCP, and Azure are great for when you need to scale up quickly or handle lots of users. They also give you access to powerful computing resources. If you're just starting out, or you want more control over your setup, running things locally can be a good choice. No matter which path you pick, the main idea is to choose what fits your project best. Think about how much traffic you expect, what kind of budget you have, and how much control you want over the technical details. Getting your AI agents deployed is a big step, and with these options, you're ready to make it happen.

Frequently Asked Questions

Why is it beneficial to host AI agents in the cloud?

Hosting AI agents in the cloud offers several key advantages. It allows for automatic scaling of your agents and their tasks, meaning they can handle more work when needed without manual adjustments. You can also get access to powerful computing resources, including special processors like GPUs, which are great for AI. Cloud hosting provides secure ways to connect with other services, like databases, and lets you set up your agents globally so they work quickly for users everywhere. It also makes it easier to update your agents and can be more cost-effective because you only pay for what you use.

How does AWS Lambda support the deployment of AI agents?

AWS Lambda is a 'serverless' option, meaning you don't have to manage any servers. It's great for AI agents because it runs your code only when it's needed, like when an event happens. This makes it very cost-effective for tasks that don't run all the time. To use it, you package your agent's code, write a small function that Lambda can run, and then deploy it. You can then set up triggers, like an API call, to make your agent work.

What are the primary options for hosting AI agents on Google Cloud Platform?

For Google Cloud, two main options are Cloud Functions and Cloud Run. Cloud Functions is similar to AWS Lambda, offering a serverless way to run your agent's code. Cloud Run is excellent for agents that are packaged in 'containers,' which are like self-contained software boxes. Cloud Run can automatically scale these containers up or down based on demand. For more advanced AI agent setups, Google's Vertex AI Agent Engine provides a specialized platform.

Which services does Microsoft Azure offer for deploying AI agents?

Microsoft Azure provides Azure Functions for serverless deployments, much like AWS Lambda and Google Cloud Functions. For containerized AI agents, Azure Container Apps or Azure Kubernetes Service (AKS) are suitable choices. These services allow you to package your agent and run it in a scalable environment. Azure also has a suite of AI services that can be easily connected to your agents for added capabilities.

What does local deployment of AI agents involve?

Local deployment means running your AI agent on your own computer or a server you control. This is often done for development and testing. You would typically set up a specific environment for your agent, often using tools like Docker to put your agent in a container. This ensures that your agent runs the same way everywhere. Managing dependencies, which are other pieces of software your agent needs, is also crucial for local setups.

What are some important best practices for deploying AI agents?

When deploying AI agents, it's important to keep them secure by carefully managing sensitive information like API keys. You should also set up good monitoring and logging so you can see how your agents are performing and if any issues arise. Automating the deployment process using CI/CD pipelines helps ensure that updates and changes to your agents are rolled out smoothly and consistently.